C++ | LUA | CMAKE | GITHUB

Complex and interactive simulations

Development Team

Matthew Davis

Rhys Mader

Aaron Koenig

Chase Percy

Project Planning and Tools

- Weekly meetings

- Project Management
  - Jira
  - Kanban Board

- Git with GitHub for source control
  - Git Flow Model
  - Git LFS
  - Branch Protection

- Doxygen

- CI/CD
  - Static Analyzer (Cppcheck)
  - Automated unit tests

Overview

This was the capstone unit for the games technology major in my degree. The unit focused on simulations, and on using what we had learnt throughout the major to visually display and interact with them. The physics simulation involved designing and developing linear and angular collision resolution for many objects in a scene. The AI simulation involved designing affordances and behaviours for our agents, including how they interact with the user, the environment, and each other. It was a challenging unit that pushed the design of our game engines and simulation components. Time was a major limiting factor, but overall I am happy with the result our team delivered!

Features developed by me

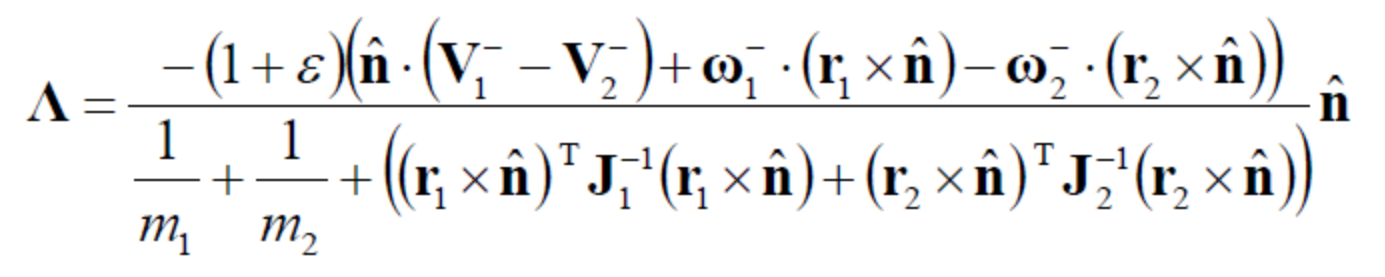

Physics Collision Resolution

The overall design for the rigid bodies was done by my teammate Rhys, but I implemented it along with the physics-related aspects. This was a difficult process that required many tests, with the simulation data verified in MATLAB. De-penetrating objects and allowing them to come to rest were the hardest parts to solve, as they aren’t physics-related problems but problems with the speed at which they need to be calculated for real-time rendering.
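To give a feel for what linear and angular collision resolution involves, here is a minimal sketch of a single impulse-based contact response. It is not the project's code: the `Vec3`, `Body`, and `resolveContact` names are hypothetical, and it assumes a scalar inverse moment of inertia (a sphere-like body) instead of a full inertia tensor. De-penetration and resting contact are not covered.

```cpp
#include <cassert>
#include <cmath>

// Minimal 3D vector type for the sketch (hypothetical, not the engine's).
struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Assumption: inertia is a single scalar, which only holds for sphere-like bodies.
struct Body {
    double invMass;       // 0 for an immovable body
    double invInertia;    // inverse moment of inertia, 0 for an immovable body
    Vec3 velocity;
    Vec3 angularVelocity;
};

// Resolves one contact between a and b.
// n is the unit contact normal pointing from a to b; ra and rb are the offsets
// from each body's centre of mass to the contact point.
// Returns the impulse magnitude that was applied (0 if none was needed).
double resolveContact(Body& a, Body& b, Vec3 n, Vec3 ra, Vec3 rb, double restitution) {
    // Velocity of the contact point on each body (linear + angular parts).
    Vec3 va = a.velocity + cross(a.angularVelocity, ra);
    Vec3 vb = b.velocity + cross(b.angularVelocity, rb);

    double relativeNormalSpeed = dot(vb - va, n);
    if (relativeNormalSpeed >= 0.0) return 0.0;  // already separating

    Vec3 raCrossN = cross(ra, n);
    Vec3 rbCrossN = cross(rb, n);
    double denominator = a.invMass + b.invMass
                       + a.invInertia * dot(raCrossN, raCrossN)
                       + b.invInertia * dot(rbCrossN, rbCrossN);
    if (denominator <= 0.0) return 0.0;  // both bodies immovable

    double j = -(1.0 + restitution) * relativeNormalSpeed / denominator;
    Vec3 impulse = n * j;

    // Equal and opposite impulse changes both linear and angular velocity.
    a.velocity = a.velocity - impulse * a.invMass;
    b.velocity = b.velocity + impulse * b.invMass;
    a.angularVelocity = a.angularVelocity - cross(ra, impulse) * a.invInertia;
    b.angularVelocity = b.angularVelocity + cross(rb, impulse) * b.invInertia;
    return j;
}
```

For two equal masses meeting head-on through their centres with a restitution of 1, this swaps their velocities and leaves their spin unchanged, which is a quick sanity check before comparing against external data.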

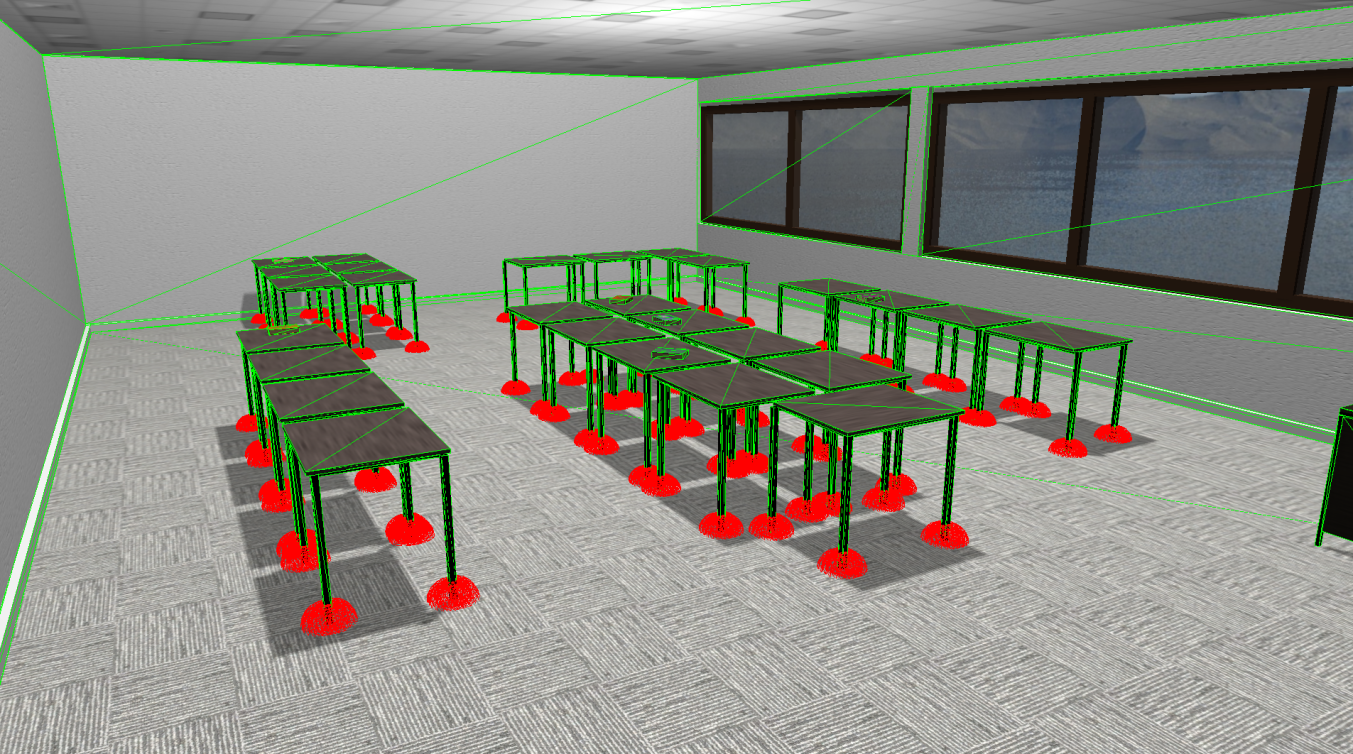

Physics Debug Renderer

The debug renderer was implemented using the strategy pattern to decouple debug data collection from rendering. Different renderers can display the information in different ways, and different collection methods can be swapped in, without either side interfering with the other. This was important as there was a lot of data to process, and we wanted to be able to change how we debugged the physics simulation without having to understand the other component.
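The decoupling described above can be sketched roughly as follows. All class names here (`DebugCollector`, `DebugRenderStrategy`, `PhysicsDebugger`, and the two concrete classes) are hypothetical stand-ins, and the text renderer only substitutes for a real graphics back end so the example stays self-contained.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// One debug primitive: a line segment from (x0, y0, z0) to (x1, y1, z1).
struct DebugLine { float x0, y0, z0, x1, y1, z1; };

// Strategy interface for gathering debug data from the simulation.
class DebugCollector {
public:
    virtual ~DebugCollector() = default;
    virtual std::vector<DebugLine> collect() const = 0;
};

// Strategy interface for presenting the gathered data.
class DebugRenderStrategy {
public:
    virtual ~DebugRenderStrategy() = default;
    virtual void render(const std::vector<DebugLine>& lines) = 0;
};

// Context: owns one strategy of each kind and knows nothing about their internals.
class PhysicsDebugger {
public:
    PhysicsDebugger(std::unique_ptr<DebugCollector> collector,
                    std::unique_ptr<DebugRenderStrategy> renderer)
        : collector_(std::move(collector)), renderer_(std::move(renderer)) {}

    // Swap the renderer at runtime without touching the collector.
    void setRenderer(std::unique_ptr<DebugRenderStrategy> renderer) {
        renderer_ = std::move(renderer);
    }

    void draw() { renderer_->render(collector_->collect()); }

private:
    std::unique_ptr<DebugCollector> collector_;
    std::unique_ptr<DebugRenderStrategy> renderer_;
};

// Example collector: returns a fixed set of lines (a real one would query the physics world).
class FixedLineCollector : public DebugCollector {
public:
    explicit FixedLineCollector(std::vector<DebugLine> lines) : lines_(std::move(lines)) {}
    std::vector<DebugLine> collect() const override { return lines_; }
private:
    std::vector<DebugLine> lines_;
};

// Example renderer: writes a text summary into a caller-owned string.
class TextRenderer : public DebugRenderStrategy {
public:
    explicit TextRenderer(std::string& out) : out_(out) {}
    void render(const std::vector<DebugLine>& lines) override {
        out_ += std::to_string(lines.size()) + " lines\n";
    }
private:
    std::string& out_;
};
```

Because `PhysicsDebugger` only depends on the two interfaces, a wireframe renderer or a different collector can be added without either one needing to know how the other works.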

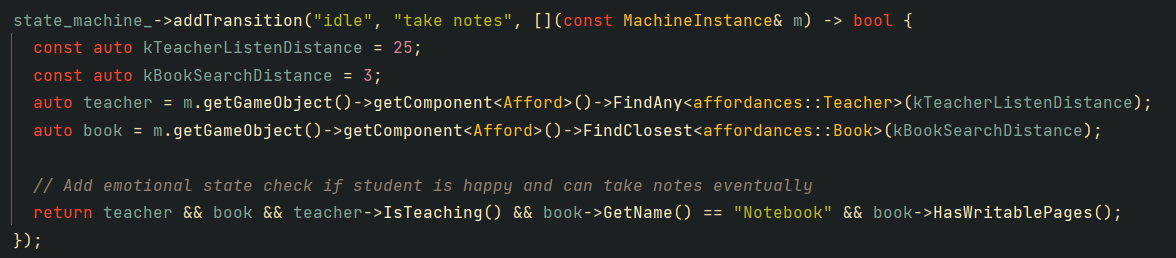

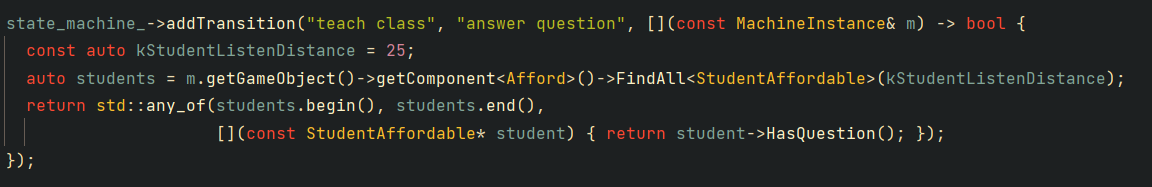

Affordances

The affordance system was designed to be flexible, both in how it works with our Entity Components and in how it is implemented. When designing it I was only interested in the higher-level triggering and passing of information, not the lower-level implementation, as that would be done later by the user of the affordance system. Below are a few ways it can be used:

Afford objects directly, assuming the agent knows and understands the objects around it and what they can do.

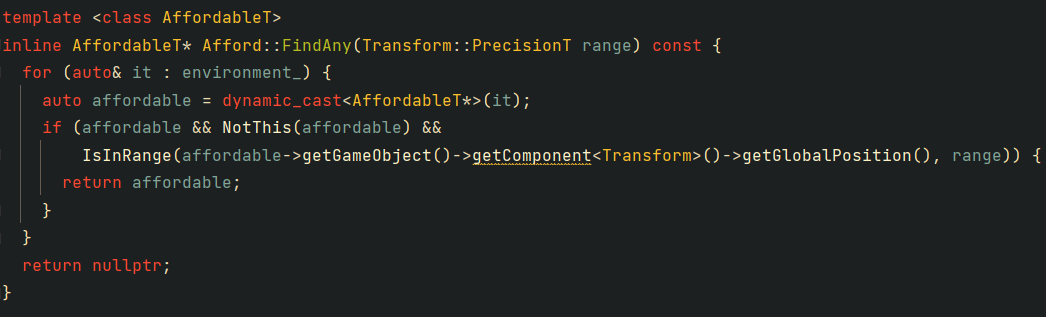

How affordable objects are found using the FindAny method.

Afford all objects of a type within range in the environment and then batch process them.

There are a few other ways it can be used. Strings can be passed instead of using static casts to determine the object’s type. It can also find anything in the environment within a given range, letting the agent process those objects individually and afford what they can do from a list of possible affordances provided by a separate method.
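As a rough illustration of the string-based, range-limited usage, the sketch below finds every object within range that offers a named affordance and then batch processes the results. It is not the engine's API: `AffordableObject`, `findAffordable`, and `affordAll` are hypothetical names, and the real system works with Entity Components, not a plain vector of structs.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <map>
#include <string>
#include <vector>

struct Position { float x, y, z; };

// Hypothetical object that advertises named affordances as callbacks.
// The callback body is the "lower-level implementation" left to the user.
struct AffordableObject {
    std::string name;
    Position position;
    std::map<std::string, std::function<void()>> affordances;

    bool affords(const std::string& affordance) const {
        return affordances.count(affordance) > 0;
    }
};

float distanceBetween(Position a, Position b) {
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Finds every object within range of origin that offers the named affordance.
std::vector<AffordableObject*> findAffordable(std::vector<AffordableObject>& world,
                                              Position origin, float range,
                                              const std::string& affordance) {
    std::vector<AffordableObject*> found;
    for (AffordableObject& object : world) {
        if (object.affords(affordance) &&
            distanceBetween(object.position, origin) <= range) {
            found.push_back(&object);
        }
    }
    return found;
}

// Batch processes the results by triggering the affordance on each object.
// Every object passed in must offer the affordance (at() throws otherwise).
void affordAll(const std::vector<AffordableObject*>& objects,
               const std::string& affordance) {
    for (AffordableObject* object : objects) {
        object->affordances.at(affordance)();
    }
}
```

An agent could call `findAffordable(world, agentPosition, 5.0f, "sit")` and pass the result to `affordAll`, or iterate the results itself to decide per object what to do.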

Shadows

I added shadows to help with depth perception when viewing the physics simulation. A simple shader renders all objects in the scene from the light source's point of view and stores the closest distance to the light in a texture. That texture is then referenced when rendering normally: if a point's depth relative to the light source is greater than the depth stored in the texture map, it is behind another object, so the rendered colour is darkened to give a shadow effect.
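The depth comparison at the heart of this technique can be sketched on the CPU as below. The project did this in a shader; this C++ version only illustrates the logic. The `DepthMap` and `shadowFactor` names are hypothetical, and the small `bias` term is an assumption added here (a common way to avoid surfaces shadowing themselves), not something the original shader is known to use.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for the depth texture rendered from the light's point of view.
struct DepthMap {
    std::size_t width;
    std::size_t height;
    std::vector<float> depth;  // closest depth to the light per texel, row-major

    float at(std::size_t x, std::size_t y) const { return depth[y * width + x]; }
};

// Returns the multiplier to apply to the rendered colour:
// 1.0 when lit, `darkening` when something closer to the light covers this texel.
float shadowFactor(const DepthMap& map, std::size_t x, std::size_t y,
                   float depthFromLight, float bias = 0.005f,
                   float darkening = 0.5f) {
    assert(x < map.width && y < map.height);
    bool occluded = depthFromLight - bias > map.at(x, y);
    return occluded ? darkening : 1.0f;
}
```

A point whose depth matches the stored value is the closest surface to the light and stays fully lit, while anything farther away along the same light ray gets its colour scaled down.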

Agents

The agents in the scene were implemented in a basic way due to time constraints. They had emotional systems and interacted with the environment, but for simplicity they didn’t physically interact with it or move around it. To help show what the agents were doing and what their dominant emotional state was, I added a billboard system that displays this information using simple text and an emoji. The emotional states and transitions of the agents were designed as a team, but the implementation was left to me and Matt.
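Picking the dominant emotional state for the billboard could look something like the sketch below. The `Emotion` struct, the intensity value, and both function names are hypothetical; the actual emotional model and its transitions are not described here, so this only shows the "strongest state wins" selection and the text that would be handed to a billboard.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical emotional state: a label, the emoji shown for it, and a strength.
struct Emotion {
    std::string name;
    std::string emoji;
    float intensity;
};

// Returns the emotion with the highest intensity (the first one wins on ties).
const Emotion& dominantEmotion(const std::vector<Emotion>& emotions) {
    assert(!emotions.empty());
    return *std::max_element(emotions.begin(), emotions.end(),
                             [](const Emotion& a, const Emotion& b) {
                                 return a.intensity < b.intensity;
                             });
}

// Builds the text a billboard above the agent would display.
std::string billboardText(const std::string& agentName,
                          const std::vector<Emotion>& emotions) {
    const Emotion& dominant = dominantEmotion(emotions);
    return agentName + ": " + dominant.name + " " + dominant.emoji;
}
```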